A group of researchers has developed an artificial intelligence (AI) system that can protect users from unwanted facial scanning by bad actors. Dubbed Chameleon, the AI model generates a mask that hides faces in images from recognition tools without affecting the visual quality of the protected image. Additionally, the researchers claim that the model is resource-optimised, making it usable even with limited processing power. The researchers have not yet made the Chameleon AI model public, but they have stated their intention to release the code soon.

Researchers Unveil Chameleon AI Model

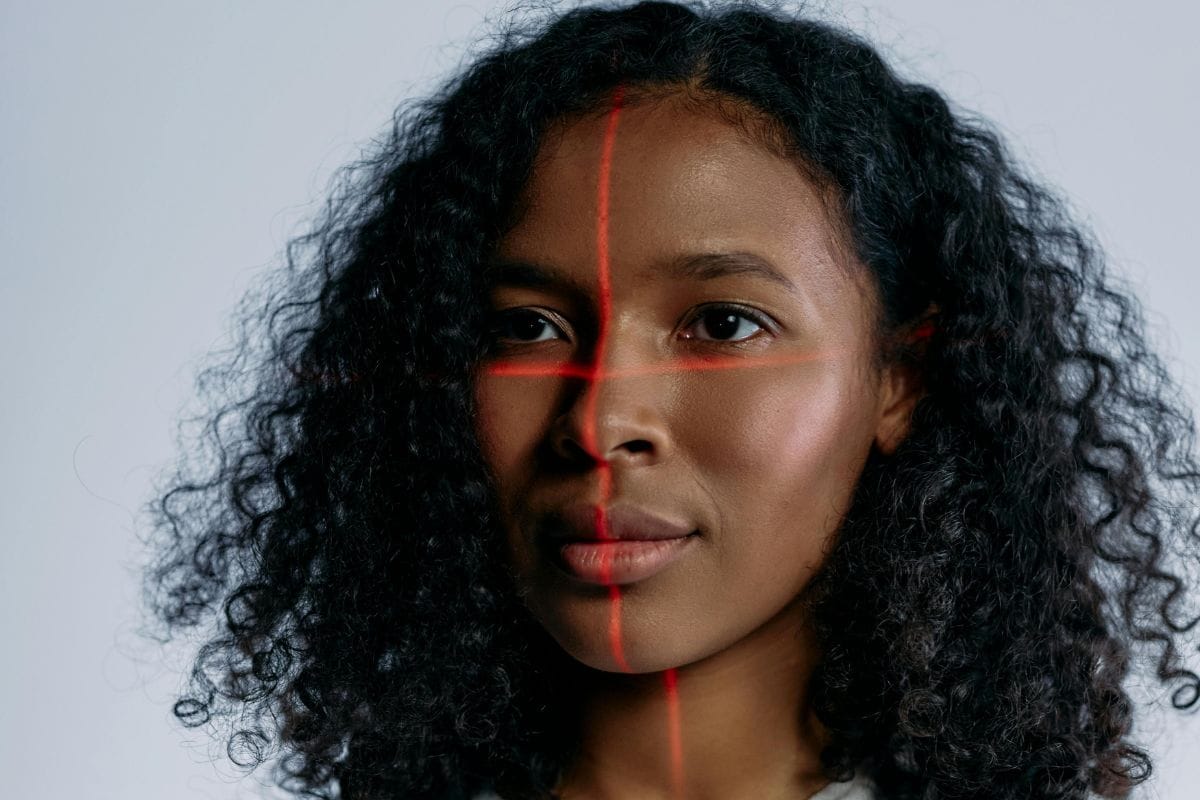

In a research paper published on the pre-print server arXiv, researchers from the Georgia Institute of Technology (Georgia Tech) detailed the AI model. The tool can add an invisible mask to faces in an image so that facial recognition tools cannot identify them. This way, users can protect their identity from facial data scanning attempts by bad actors and AI data-scraping bots.

“Privacy-preserving data sharing and analytics like Chameleon will help to advance governance and responsible adoption of AI technology and stimulate responsible science and innovation,” said Ling Liu, professor of data and intelligence-powered computing at Georgia Tech’s School of Computer Science, and the lead author of the research paper.

Chameleon uses a special masking technique called a personalised privacy protection (P-3) mask. Once the mask has been applied, facial recognition tools cannot correctly identify the person in the image, as the scans will show them “as being someone else.”

While face masking tools already exist, the Chameleon AI model innovates on both resource optimisation and image quality preservation. To achieve the former, the researchers highlighted that instead of generating a separate mask for each photo, the tool generates one mask per user based on a few user-submitted facial photos. This way, only a limited amount of processing power is required to protect each new image with the invisible mask.
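To illustrate why a single per-user mask is cheap to reuse, here is a minimal Python sketch. It is not Chameleon's code, which has not been released: the function `apply_user_mask`, the `epsilon` budget, the image size, and the random placeholder mask are all assumptions made for illustration. A real mask would be learned from the user's photos rather than drawn at random.

```python
import numpy as np

def apply_user_mask(image, mask, epsilon=8.0):
    """Add one pre-computed per-user perturbation to an image (hypothetical helper)."""
    # Bound the perturbation so no pixel changes by more than epsilon (assumed budget).
    bounded = np.clip(mask, -epsilon, epsilon)
    # Add the mask and keep the result inside the valid 0-255 pixel range.
    protected = np.clip(image.astype(np.float32) + bounded, 0, 255)
    return protected.astype(np.uint8)

rng = np.random.default_rng(0)

# Placeholder mask: random noise stands in for a mask learned from a user's photos.
user_mask = rng.uniform(-8, 8, size=(112, 112, 3)).astype(np.float32)

# Three stand-in "photos" of the same user.
photos = [rng.integers(0, 256, size=(112, 112, 3), dtype=np.uint8) for _ in range(3)]

# The same mask is reused for every photo, so protecting a new image is one addition.
protected_photos = [apply_user_mask(p, user_mask) for p in photos]

# Largest per-pixel change across all photos.
max_change = max(
    int(np.abs(a.astype(int) - b.astype(int)).max())
    for a, b in zip(photos, protected_photos)
)
```

In this sketch the expensive step, producing the mask, would happen once per user, while protecting each additional photo costs only an element-wise addition and a clip.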

The second challenge, preserving the image quality of a protected photo, was trickier. To solve this, the researchers used a perceptibility optimisation technique in Chameleon. It automatically renders the mask without any manual intervention or parameter setting, so that the mask does not degrade the overall image quality.

Calling the AI model an important step towards privacy protection, the researchers revealed that they plan to release Chameleon’s code publicly on GitHub soon. Developers will then be able to integrate the open-source AI model into their applications.