Google’s patent document shows the adaptive assistant in action

Photo Credit: WIPO/Google

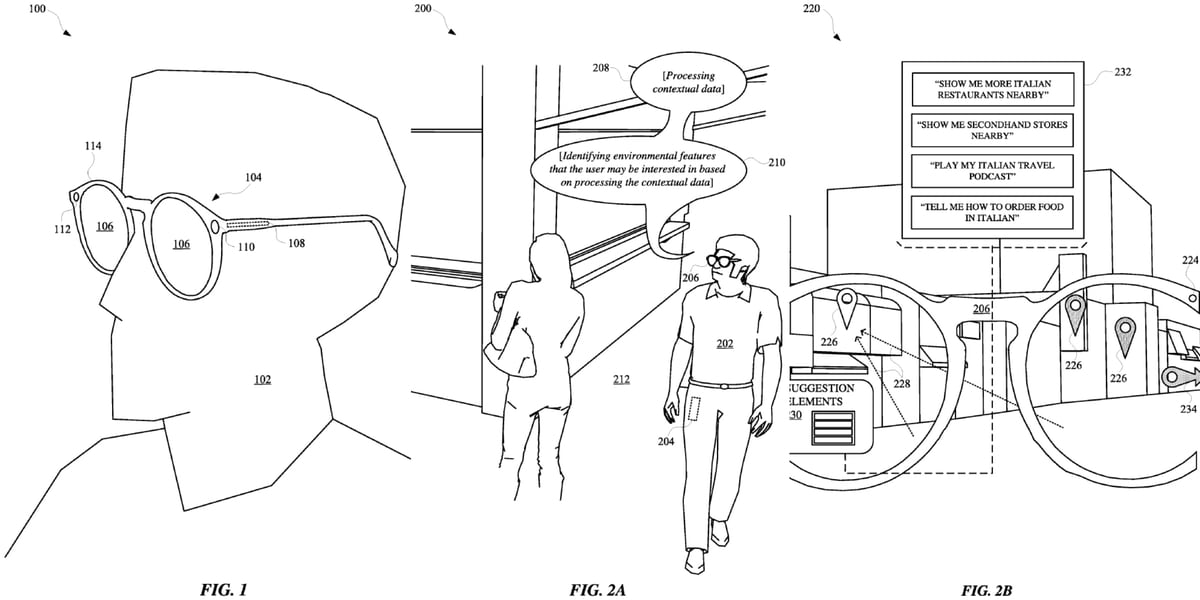

The company says that the automated assistant would be able to render suggestions on the display of the smart glasses, and that users would be able to select these options with technology that tracks their “gaze”. This suggests that the device would be equipped with some form of eye tracking that the assistant could use.

When a user looks somewhere else, the assistant would be able to use the camera and microphone on the smart glasses to “adapt” its suggestions dynamically, based on the user’s point of view and their verbal instructions, respectively.

Google gives the example of a user wearing the glasses while exploring a foreign city. The device would suggest restaurants based on the direction of the user’s gaze (Fig. 2B). Users would be able to invoke the assistant by tapping the glasses or using a wake phrase.

According to the document, the assistant would also limit the number of suggestions shown on the smart glasses’ display, as too many suggestions could obstruct the wearer’s view. These suggestions could be selected using gestures or a spoken command. The patent also suggests that the assistant could interface with other applications on the device.

The company also describes the ability to “offload computational tasks” to a server device, which would enable the smart glasses to “conserve computational resources” and, in effect, extend battery life. This means that the assistant could be hosted on either the server or the smart glasses, while processes related to the assistant’s operations could take place on either device.